Moving Shot Stabilization in Blender

Blender's 2D video stabilization is good, but it has some omissions:

It can't stabilize pans or any other kind of moving shot. When stabilization is enabled, the stabilizer in effect pins the track markers to the screen and translates the input image to keep them in the same position. That translation is destructive: if you add, for example, a transform strip on top of stabilized footage, you can't counteract any of the translation done by the 2D stabilization.

It can't filter out only the high-frequency shakes while leaving the intended camera movement alone.

1. Overview

When I filmed Parvaz at Chaharshanbe Suri this year, I ended up with footage that was reasonably stable (thanks to the monopod for less), but I vastly prefer watching dance performed on almost perfectly stable video. I expect the dancers to dance around, not the image itself.

So I set out to fill this void in Blender's feature set.

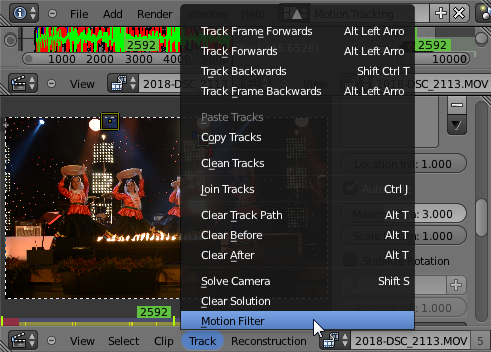

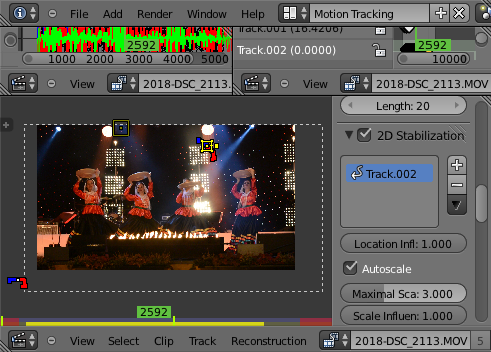

The end result was that I implemented the Moving Shot Stabilization algorithm using Blender's Python interface. You select a track in the motion tracking screen, click on Track > Motion Filter, select the degree of stabilization, and off you go. It will output a new track that you can use for 2D stabilization.
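To make the idea of a "motion filter" concrete, here is a minimal sketch in plain Python of one filter that separates slow camera movement from shake. It is not the addon's actual code: the function names, the `smoothing` parameter and the choice of exponential smoothing are all assumptions made for illustration. The one property it shares with the text is that the correction never exceeds `radius` pixels on either axis.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def clamp(value: float, low: float, high: float) -> float:
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def camera_path(points: Sequence[Point], radius: float,
                smoothing: float = 0.1) -> List[Point]:
    """Estimate the slow, intended camera movement (hypothetical filter).

    Each step moves the path a fraction `smoothing` towards the tracked
    point, then clamps it so it is never more than `radius` pixels away
    from that point on either axis.
    """
    if not points:
        return []
    path = [points[0]]
    for x, y in points[1:]:
        px, py = path[-1]
        sx = px + smoothing * (x - px)
        sy = py + smoothing * (y - py)
        path.append((clamp(sx, x - radius, x + radius),
                     clamp(sy, y - radius, y + radius)))
    return path


def shake_track(points: Sequence[Point], radius: float,
                smoothing: float = 0.1) -> List[Point]:
    """Build a track containing only the shake around a fixed anchor.

    Pinning this track to the screen removes the shake but keeps the
    movement described by camera_path().
    """
    if not points:
        return []
    ax, ay = points[0]
    path = camera_path(points, radius, smoothing)
    return [(ax + x - px, ay + y - py)
            for (x, y), (px, py) in zip(points, path)]


# Made-up marker positions in pixels, one per frame.
tracked = [(0.0, 0.0), (4.0, 0.0), (40.0, 0.0)]
print(camera_path(tracked, radius=10.0, smoothing=0.5))
print(shake_track(tracked, radius=10.0, smoothing=0.5))
```

Because the output track only ever deviates from its anchor by at most `radius`, a stabilizer that pins it to the screen can shift the image by no more than that amount.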

2. Installation

This script is compatible with Blender 2.69 and 2.72. I have not tested it on any other versions; it may or may not work there.

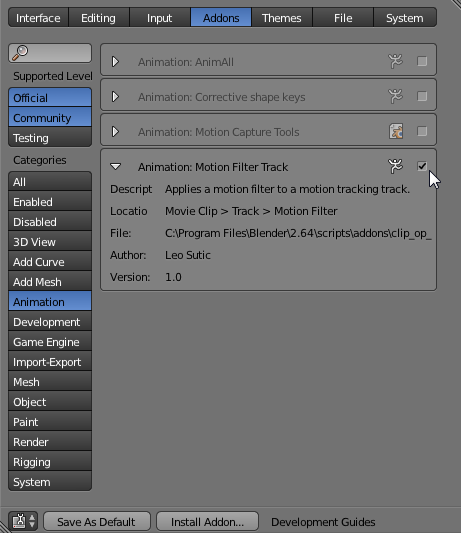

Unzip and drop it in your Blender addons/ folder. Then go to the user preferences and enable it. It's under the "Animation" category.

3. Usage

Do the motion tracking as usual. Right now, the code will only use the first selected input track, so select the track you want to filter and click on Track > Motion Filter.

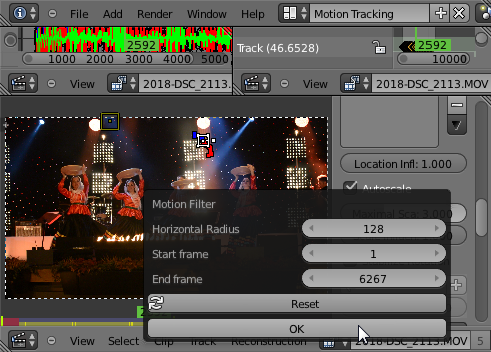
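The "first selected track" rule can be sketched as a small helper. This is an illustration rather than the addon's code: `first_selected` is a hypothetical name, and the stand-in objects below only mimic the `name` and `select` attributes that I assume a Blender tracking track exposes, so that the example runs outside Blender.

```python
from types import SimpleNamespace
from typing import Iterable, Optional


def first_selected(tracks: Iterable) -> Optional[object]:
    """Return the first track whose `select` flag is set, or None."""
    return next((t for t in tracks if getattr(t, "select", False)), None)


# Stand-ins for tracks; inside Blender these would presumably come
# from the active movie clip's tracking data instead.
tracks = [
    SimpleNamespace(name="Track", select=False),
    SimpleNamespace(name="Track.001", select=True),
    SimpleNamespace(name="Track.002", select=True),
]

chosen = first_selected(tracks)
print(chosen.name if chosen else "no track selected")
```

Any further selected tracks are simply ignored, which is why it matters which track comes first when several are selected.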

Enter values for radius, start and end frame.

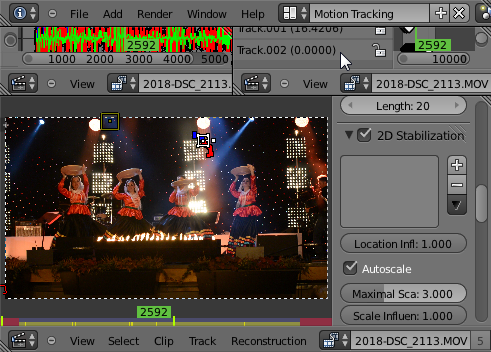

Since I haven't been able to figure out how to create a new track and not have it hidden, you may have to press Alt+H to have the new track appear in the list.

Use the new track for 2D stabilization. Due to the way the filter works, the autoscale will not magnify the footage any more than $\frac{\text{width}}{\text{width} - 2 \cdot \text{radius}}$. So, for example, if you have full HD source footage (1920 pixels across) and you use the default radius setting, then the maximum required scaling will be $\frac{1920}{1920 - 2 \cdot 128} \approx 1.15$.
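The scaling bound is easy to check for other resolutions and radius values. The helper below is just the formula above written out; the name `max_autoscale` is made up for this example and is not part of the addon.

```python
def max_autoscale(width: float, radius: float) -> float:
    """Upper bound on the zoom needed to hide the stabilization borders.

    The image is shifted by at most `radius` pixels in either direction,
    so at most 2 * radius pixels of the width are lost.
    """
    if radius < 0 or 2 * radius >= width:
        raise ValueError("radius must be non-negative and less than half the width")
    return width / (width - 2 * radius)


# Full HD footage with a radius of 128 pixels.
print(round(max_autoscale(1920, 128), 2))  # 1.15
```

A larger radius lets the filter absorb bigger shakes, at the cost of a larger worst-case zoom.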

4. Future

I hope to add different types of filters, as well as to smoothly handle more than one track.