Multi-Camera Sync

When filming Layali Dance Academy's Student Shows, I use two cameras: one to film the whole stage and one for close-ups. This means that I end up with a set of clips that must be synced. Out of curiosity, I decided to whip up an Ant[a] task to synchronize clips based on their audio tracks.

1. The Problem

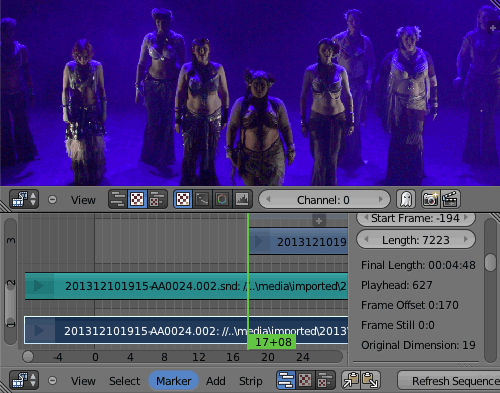

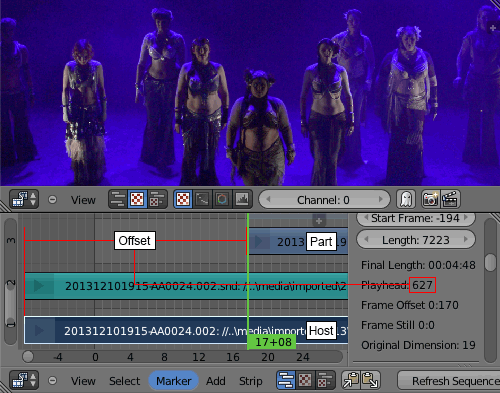

When assembling multiple clips in Blender's Video Sequence Editor, we want to position them so that their footage is synchronized.

In particular, we want to find the offset from the start of the longer, or "host", clip at which the shorter, or "part", clip should start. In the example shown, the offset is 627 frames.

2. Overview of Process

The theory behind this is simple: load the audio track we want to match and the track we want to match it against. Then normalize the volume of both tracks to remove differences caused by microphone gain. Finally, find the position of the first track within the second that gives the least mean squared difference between them.

2.1. Example

Here we have two waveforms. They are quite different, and the error (light red) is substantial.

However, if we shift the red waveform one sample to the right (corresponding to increasing the offset by one), the error drops and we have quite a good fit.

2.2. The Sources

In the example here, the "host" track was recorded with a Canon XF100[b], and the "part" clip with a Nikon D3200[c]. In both cases, the internal microphone was used with automatic gain.

3. Loading the Audio

When loading the audio, I use FFmpeg to extract it from the clip, convert it to mono, and resample it to 48 kHz, 16-bit signed, big-endian PCM. This ensures a uniform input.
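A minimal sketch of how the extraction step could be driven from Java, assuming an `ffmpeg` binary on the `PATH`. The class and method names (`AudioExtractor`, `ffmpegCommand`, `openAudio`) are hypothetical. The flags are standard FFmpeg options: `-vn` drops the video, `-ac 1` downmixes to mono, `-ar 48000` resamples, and `-f s16be` writes raw signed 16-bit big-endian PCM, here to standard output (`-`).

```java
import java.io.BufferedInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.util.List;

// Hypothetical helper: runs FFmpeg and exposes its raw PCM output as a DataInputStream.
final class AudioExtractor {
    static final int SAMPLE_RATE = 48000;

    // Builds the command line: mono, 48 kHz, raw 16-bit signed big-endian PCM on stdout.
    static List<String> ffmpegCommand(String clipPath) {
        return List.of(
            "ffmpeg", "-nostdin", "-loglevel", "error",
            "-i", clipPath,
            "-vn",                                 // ignore the video stream
            "-ac", "1",                            // downmix to mono
            "-ar", Integer.toString(SAMPLE_RATE),  // resample to 48 kHz
            "-f", "s16be",                         // raw signed 16-bit big-endian samples
            "-");                                  // write to standard output
    }

    // Starts FFmpeg. DataInputStream.readShort() reads big-endian, matching s16be.
    static DataInputStream openAudio(String clipPath) throws IOException {
        Process p = new ProcessBuilder(ffmpegCommand(clipPath))
            .redirectError(ProcessBuilder.Redirect.INHERIT)
            .start();
        return new DataInputStream(new BufferedInputStream(p.getInputStream()));
    }
}
```

Big-endian output is a convenient choice in Java, since `DataInputStream` has no little-endian readers.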

As they are read, the 48 kHz samples are squared to get a volume value and downsampled by averaging to 16 times the frame rate. For 48 kHz sound used to sync a 25 fps video, that means downsampling to 25 times 16, or 400 Hz, which is a 120:1 reduction. This 120:1 rate is called the base rate.

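```java
import java.io.DataInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

class BaseRateLoader {
    static final int BASE_RATE_PER_FRAME = 16;

    // Reads 16-bit signed big-endian mono samples from dis and returns one
    // mean-square (volume) value per baseRate input samples.
    static List<Float> load(DataInputStream dis, float sampleRate, float frameRate)
            throws IOException {
        // Input samples per base-rate sample: 48000 / (16 * 25) = 120
        int baseRate = (int) (sampleRate / (BASE_RATE_PER_FRAME * frameRate));
        List<Float> loadedData = new ArrayList<>();
        try {
            while (true) { // Exit by EOFException
                float d = 0;
                for (int j = 0; j < baseRate; ++j) {
                    // Read a single 16-bit signed sample
                    float s = dis.readShort();
                    // Square it to get the volume and add to accumulator
                    d += s * s;
                }
                // Store the average
                loadedData.add(d / baseRate);
            }
        } catch (EOFException e) {
            // End of input; a trailing partial block is dropped
        }
        return loadedData;
    }
}
```

4. Normalizing and Building the Multi-resolution Sequences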

Once the audio data has been loaded and the initial downsampling is complete, the data is normalized by scaling so that the average volume is equal to 1.0. This is simply done by dividing each sample by the average of all samples. Then the data is repeatedly downsampled 2:1 by averaging adjacent samples to get a set of samplings, each one half the size of the previous, until the length of the sampling is less than 32 samples. (The value 32 is arbitrarily chosen as the lowest meaningful resolution.)
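The normalization and 2:1 pyramid could be sketched as follows. This is a minimal illustration, not the author's code: the class name `Pyramid` is hypothetical, the input is assumed to be a non-silent base-rate array, and the stopping rule is one reading of the text (no level shorter than 32 samples is produced). An odd trailing sample is dropped when halving.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper; names are illustrative.
final class Pyramid {
    static final int MIN_LENGTH = 32; // lowest meaningful resolution, per the text

    // Scales the data so that its average is 1.0 (assumes the average is non-zero).
    static float[] normalize(float[] data) {
        double sum = 0;
        for (float v : data) sum += v;
        float mean = (float) (sum / data.length);
        float[] out = new float[data.length];
        for (int i = 0; i < data.length; i++) out[i] = data[i] / mean;
        return out;
    }

    // Level 0 is the normalized base rate; each following level averages
    // adjacent pairs, so sample i of level k covers base samples
    // i * 2^k .. i * 2^k + 2^k - 1.
    static List<float[]> build(float[] baseRate) {
        List<float[]> levels = new ArrayList<>();
        float[] current = normalize(baseRate);
        levels.add(current);
        while (current.length / 2 >= MIN_LENGTH) {
            float[] next = new float[current.length / 2];
            for (int i = 0; i < next.length; i++) {
                next[i] = (current[2 * i] + current[2 * i + 1]) / 2f;
            }
            levels.add(next);
            current = next;
        }
        return levels;
    }
}
```

With 16 base-rate samples per frame, level 4 holds one sample per video frame.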

5. Matching

Now that we have a pyramid representation of the two audio tracks, we start matching.

For each level of downsampling, the corresponding audio data is taken from the track to sync against (called the "host" track), and the track to sync (called the "part" track). Then we find the offset in the host track where the mean squared error against the part track is the least. The two figures below illustrate a bad and a good match. The top half is the part track and the lower half is the host track. As you can see, even with a good match there are errors.
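The per-level search can be illustrated with a plain exhaustive scan. This is a sketch under the assumption that the part track lies entirely inside the host track; `OffsetSearch`, `meanSquaredError`, and `bestOffset` are hypothetical names.

```java
// Hypothetical helper: exhaustive search for the offset of `part` within `host`
// that minimizes the mean squared error over the overlapping samples.
final class OffsetSearch {
    static double meanSquaredError(float[] host, float[] part, int offset) {
        double sum = 0;
        for (int i = 0; i < part.length; i++) {
            double diff = host[offset + i] - part[i];
            sum += diff * diff;
        }
        return sum / part.length;
    }

    // Assumes part.length <= host.length; only offsets where the part fits are tried.
    static int bestOffset(float[] host, float[] part) {
        int best = 0;
        double bestError = Double.POSITIVE_INFINITY;
        for (int offset = 0; offset + part.length <= host.length; offset++) {
            double error = meanSquaredError(host, part, offset);
            if (error < bestError) {
                bestError = error;
                best = offset;
            }
        }
        return best;
    }
}
```

For example, with `host = {0, 0, 1, 5, 2, 0, 0, 0}` and `part = {1, 5, 2}`, `bestOffset` returns 2, where the error is zero. The scan costs roughly host length times part length per level, which is one reason the coarse levels are cheap.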

The spike corresponds to the "good match" example above. As can be seen, the error at offset 627 stands out.

This is the "spike" at base rate. The minimum is at offset 10029, corresponding to video frame 626.81 (10029 / 16), which we round up to 627.
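Converting a base-rate offset to a video frame is a division by the 16 base-rate samples per frame. The sketch below (hypothetical class `OffsetToFrame`) rounds to the nearest frame with `Math.round`; for offset 10029 that gives the same 627 as rounding up.

```java
// Hypothetical helper for converting base-rate offsets to video frames.
final class OffsetToFrame {
    static final int BASE_RATE_PER_FRAME = 16;

    // Fractional video frame for a base-rate offset.
    static double toFrame(int baseOffset) {
        return (double) baseOffset / BASE_RATE_PER_FRAME;
    }

    // Nearest whole frame, for positioning the clip in the sequencer.
    static long toWholeFrame(int baseOffset) {
        return Math.round(toFrame(baseOffset));
    }
}
```

Here `toFrame(10029)` is 626.8125 and `toWholeFrame(10029)` is 627.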

6. Scoring

Since we are matching sequences with different resolutions, the best matching offset in a downsampled sequence corresponds to multiple offsets in a higher-resolution sequence, as the sample in the downsampled sequence was created from multiple samples in the higher-resolution sequence. For example, if the matching at one sample per video frame (sixteen base rate samples per sample) gave the best offset as 100, then we'd expect the best match at base rate to be somewhere between offsets 1600 and 1615, as those samples at base rate went into the single sample at offset 100.

Therefore we maintain a "vote count" for every offset at base rate. For each matching level, we add one vote to every base-rate offset covered by that level's best offset. Then we pick the base-rate offset with the highest score.
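A minimal sketch of the voting step, assuming the pyramid layout in which level k averages 2^k base-rate samples. `Voting` and `pickBaseOffset` are hypothetical names, `bestOffsets.get(k)` is the best offset found at level k, and `baseLength` is the number of candidate base-rate offsets. Ties go to the lowest offset, which is an arbitrary choice.

```java
import java.util.List;

// Hypothetical sketch of the voting step across pyramid levels.
final class Voting {
    static int pickBaseOffset(List<Integer> bestOffsets, int baseLength) {
        int[] votes = new int[baseLength];
        for (int level = 0; level < bestOffsets.size(); level++) {
            int span = 1 << level;                      // base samples per sample at this level
            int start = bestOffsets.get(level) * span;  // first covered base-rate offset
            for (int i = start; i < start + span && i < baseLength; i++) {
                votes[i]++;                             // one vote per covered offset
            }
        }
        // Highest vote count wins; ties go to the lowest offset.
        int best = 0;
        for (int i = 1; i < baseLength; i++) {
            if (votes[i] > votes[best]) best = i;
        }
        return best;
    }
}
```

Following the example in the text, a best offset of 100 at level 4 (one sample per frame) votes for base-rate offsets 1600 to 1615.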

The spike is at offset 10029 at base rate, corresponding to frame 626.81, or 627. The "broad base" is the best match in a downsampled sequence. As we see, the match in the downsampled sequence overlaps the best match, but there is also a "best" match that doesn't: the little rectangle just to the right of the spike.

The spike at offset 10029 is still there, but as we can see, the best match at base rate need not be the best match at other resolutions.

7. Performance

The sync code was run on seven "part" clips from the first act of the first day of Layali Dance Academy's Student Shows for Autumn 2013, matching them against four "host" clips. In every case the match was frame-perfect.

8. Endnote: A Comment On Blender's Stance

The Blender Manual for the Multicam Selector[d] opposes the use of the soundtrack for synchronization, stating that:

We might add automatic sync feature based on global brightness of the video frames in the future. (Syncing based on the audio tracks, like most commercial applications do, isn't very clever, since the speed of sound is only around 340 metres per second and if you have one of you camera 30 meters away, which isn't uncommon, you are already 2-3 frames off. Which *is* noticeable...)

I disagree. The whole purpose of having multiple cameras is to have different video footage, which means that global brightness is unlikely to be a useful source for matching. The sound, however, can be assumed to be more or less the same throughout the space in which we are filming, particularly since the need for this kind of synchronization usually arises when filming concerts or performances. Any sound propagation delay can be compensated for by adjusting the best sync time according to how much closer to the primary sound source the camera whose footage is being positioned is, compared to the camera with the "master" audio track. For example, if the "master" camera is 30 meters from the speakers and the camera whose footage we are positioning is 10 meters away, we simply subtract 0.06 s (or 1.46 frames at 25 fps) from the position of the footage, as that is the time it takes for sound to travel the 20-meter difference in distance.
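The compensation is a simple distance-over-speed calculation. The sketch below assumes a speed of sound of 343 m/s (dry air at about 20 °C), which reproduces the 1.46-frame figure; with the manual's 340 m/s it comes out closer to 1.47 frames. `DelayCompensation` and its methods are hypothetical names.

```java
// Hypothetical helper for sound propagation delay compensation.
final class DelayCompensation {
    static final double SPEED_OF_SOUND = 343.0; // m/s, assumed (dry air, about 20 °C)

    // Seconds to subtract from the part clip's position. Negative when the
    // part camera is farther from the sound source than the master camera.
    static double correctionSeconds(double masterDistance, double partDistance) {
        return (masterDistance - partDistance) / SPEED_OF_SOUND;
    }

    // The same correction expressed in video frames.
    static double correctionFrames(double masterDistance, double partDistance, double fps) {
        return correctionSeconds(masterDistance, partDistance) * fps;
    }
}
```

For the 30 m and 10 m example, `correctionSeconds(30, 10)` is about 0.058 s and `correctionFrames(30, 10, 25)` is about 1.46 frames.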