As I went around the little jogging track, I realized that I wanted to know just how much of the hill would be covered by Kistahöjden[a], the new residential area that is to be built there.

In order to do that, I had to fit the map from Kistahöjden's web site[b] onto Google Earth[c]. This turned out to be quite difficult. I pulled and stretched and rotated the image, but couldn't quite get it to line up. Finally I figured, why not just whip up some code that will compute the transform parameters for me? This is the result.

1. The Application

Here it is, for the impatient:

| ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||

2. Instructions

The theory behind the application is that given two pairs of reference points, with one of the pair in the overlay image and one in the map, there is only one unique way to translate and rotate the overlay image so that the overlay points in the pairs coincide with the corresponding map points.

The image we will try to overlay is on this page[d]. I've tried to contact the intellectual property holders of the map, but to no avail. Since the Swedish intellectual property laws make no exception for "quoting" maps, I can't provide any illustrative images here. You should, however, be able to download the map yourself and use it in Google Earth.

2.1. Choosing Reference Points

We start by choosing the reference points. Good points are for example centers of crossings or roundabouts. Then we measure the image and map coordinates of the reference points.

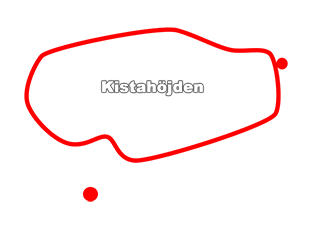

The two roundabouts are marked with red circles in the overlay.

2.2. Using the Values

With these values, we just have to add the width and height of the overlay image and we're done. To save you the hassle of copying and pasting the values:

3. Result

The algorithm can be used to create an image overlay on a Google Maps map:

var overlay = monochrome.maps.ImageOverlay.fromReferencePoints (

map, "google-maps-google-earth-image-overlay-kistahojden.png",

{

lat: 59.412744,

lon: 17.932549,

x: 270,

y: 582

},

{

lat: 59.411911,

lon: 17.941253,

x: 845,

y: 190

},

{

width: 960,

height: 683

}

);In the overlay below, I've replaced the actual map with two big red spots where the roundabouts were due to IP issues. You should, however, be able to see that the red spots match the roundabouts.

4. The Math Behind

The basic idea is to decompose the transformation from image coordinates to world ("map" or latitude / longitude) coordinates into three steps:

-

A rotation that aligns the line between the two reference points in the image with the line between the two reference points in the map.

-

A translation that moves the image so that the first reference point pair coincides.

-

A scaling that aligns the second reference point pair.

I will refer to the two reference point pairs as p_(1) and p_(2) . Each point pair has an x and a y coordinate - for the point in the image, as well as a latitude and a longitude coordinate - for the point on the map. These four numbers will be called p_(n,x) , p_(n,y) , p_(n,lat) , p_(n,lon) for n = 1,2 .

First, let's compute the deltas:

Delta_(x) = p_(2,x)-p_(1,x)

Delta_(y) = p_(2,y)-p_(1,y)

Delta_(lat) = p_(2,lat)-p_(1,lat)

Delta_(lon) = p_(2,lon)-p_(1,lon)

4.1. Rotation

We define the "width" of a degree of latitude or longitude as the distance along the surface between two parallells (lines of constant latitude) or meridians (lines of constant longitude). As we move north or south from the equator, the width of each longitude degree shrinks, and reaches zero at the poles. When computing angles in lat/long space, we need to compensate for that. Fortunately, we can compute an "average latitude per longitude ratio" for the overlay and not be far off. For a latitude lat , the ratio of the surface length of a latitude degree to longitude degree is:

lat:lon = 1:cos(lat)

That is, at the equator, one degree of latitude is as wide as one degree of longitude, but halfway to the pole, one degree of longitude is only ~70% of the width of one degree of latitude.

A suitable number to use for lat is simply the average latitude of the reference points: (p_(1,lat) + p_(2,lat)) / 2 . Once we have this correction factor we can assume that lat/long space is a cartesian grid. Finding the rotation is then easy: The angle of the line between two points relative to the positive x-axis is tan^-1(Delta_(y),Delta_(x)) , so we just subtract the resulting angle from one pair of points from the other:

theta_(c) = cos((p_(1,lat)+p_(2,lat))/2)

alpha_(map) = tan^-1(Delta_(lat), Delta_(lon)theta_(c))

alpha_(img) = tan^-1(Delta_(y),Delta_(x))

r = alpha_(img) - alpha_(map)

4.2. Translation

Finding the translation is also easy: Since we only have to satisfy the constraint that p_(1,x),p_(1,y) shall correspond to p_(1,lat),p_(1,lon) , a transformation is:

f(x,y) -> [ lon', lat' ]

lon' = x-p_(1,x)+p(1,lon)

lat' = y-p_(1,y)+p(1,lat)

4.3. Scale

Finally, we need to find the scaling factors. One for number of latitude degrees per pixel, and one for the number of longitude degrees per pixel: s_(lat) and s_(lon) . This turned out to be the hardest part, because we have four cases - three, if we ignore the degenerate case. It is obvious that if Delta_(x) and Delta_(y) are zero, which implies that Delta_(lat) and Delta_(lon) also are zero, then no meaningful scale value can be computed, as we then only have a single reference point. However, it is perfectly possible for one of each pair to be zero. This gives us two more cases. We will, however, start with the last case: when all four deltas are non-zero.

Imagine standing at p_(1) , on an image that is rotated and translated. You are looking down the positive x-axis of the image. Then you walk Delta_(x) pixels forward. As you do so, you move s_(lon)cos(r)Delta_(x) degrees of longitude and s_(lat)sin(r)Delta_(x) degrees of latitude. Then you turn 90 degrees and walk along the y-axis of the image. You then move s_(lon)cos(r + pi/2)Delta_(y) degrees of longitude and s_(lat)sin(r + pi/2)Delta_(y) degrees of latitude.

In total, then, you have moved s_(lon)cos(r)Delta_(x) + s_(lon)cos(r + pi/2)Delta_(y) degrees of longitude and s_(lat)sin(r)Delta_(x) + s_(lat)sin(r + pi/2)Delta_(y) degrees of latitude. But if our values for s_(lat) and s_(lon) are correct, these distances along the latitude and longitude axes should be equal to Delta_(lat) and Delta_(lon) ! We therefore set:

r_(2) = r+pi/2

Delta_(lon) = s_(lon)cos(r)Delta_(x) + s_(lon)cos(r_(2))Delta_(y)

Delta_(lat) = s_(lat)sin(r)Delta_(x) + s_(lat)sin(r_(2))Delta_(y)

...and solve for s_(lat) and s_(lon) :

s_(lon) = Delta_(lon) / (cos(r)Delta_(x) + cos(r_(2))Delta_(y))

s_(lat) = Delta_(lat) / (sin(r)Delta_(x) + sin(r_(2))Delta_(y))

4.3.1. The Zero-delta Cases

For the cases when Delta_(lat) or Delta_(lon) are zero, we have to rely on the average ratio of latitude to longitude width. For these two cases, then, we compute the scale factor for the non-zero delta, and multiply or divide as needed with the average latitude per longitude ratio to get the other scale factor.

4.4. The Transformation

Putting it all together, we find that the transformation is:

f([x,y]) -> [lat' , lon']

delta_(x) = x-p_(1,x)

delta_(y) = y-p_(1,y)

lat' = p_(1,lat) + sin(r)*delta_(x)*s_(lat) + sin(r_(2))*delta_(y)*s_(lat)

lon' = p_(1,lon) + cos(r)*delta_(x)*s_(lon) + cos(r_(2))*delta_(y)*s_(lon)

We can now compute the latitude and longitude of any point in the image. Placing the image in the map is now trivial: We compute the latitude and longitude for the lower left corner, use the fromLatLngToDivPixel method to figure out screen-space coordinates, apply CSS width and height values to scale and a CSS transformation to rotate around the lower left corner.